I’m a complex-adaptive-system respecter. This essay is about complex-adaptive-system deniers, and how they practice their craft.

This is my framework for assessing risk and for deciding what can and cannot be modeled.

Modeling the Un-modelable

I like models, as in models used to predict things. Our society is better for them. In fact, in a way everyone likes models. You like them for what they help you avoid: uncertainty. Humans would rather have certain bad news than uncertain news. Models try to offer a lens into the future, making it more predictable and mitigating its volatility by helping us navigate it better.

BUT… some things cannot be modeled. The complex, the emergent… man, we really hate those pesky Nth-order effects. And the mids REALLY hate being told their models are bad, or even harmful! But we still try to model them anyway.

I’m a model respecter for many things. For example: investing. But not all investing…

You want to show me a DCF model for your Walmart or insurance company thesis? Great, would love to see it. Those have a history to reference, reliable income figures, predictable cash flows and growth, and can be modeled with confidence. We have a precedent we can see and inputs we can plug in with a high probability of predicting the future. Good model.

However, I am not interested in seeing your DCF of Cloudflare. Or Nvidia. Or Ethereum. I also would be completely uninterested in seeing your model of Amazon in 2006. I don’t care how astute and well-researched it was, because you completely missed AWS.

And it’s not your fault: no one could have predicted that a business better than Amazon would sprout out of Amazon. I bet a model of Yahoo and Google in 2003 would have given the nod to Yahoo. Technology investing is a winner-take-most, power-law-on-steroids game that incorporates the oscillations of human behavior, trends, and impulses. Technology adoption, emergent social behaviors, S-curves… your hubris is a sight to behold if you find yourself predicting these things with confidence.

In the domain of technology and growth investing, your financial model is wrong. You are using numbers to tell your story because it feels more objective to you. It is no less a fiction or a guess for being written in Excel instead of Word. It can give a good sense of potential outcomes and ways to think about things, but the accuracy is non-existent.

Growth tech investing (which crypto is, too) has to factor in emergent S-curves and power-law dynamics. It has to predict human adoption trends and new behaviors as they manifest. You can’t model these things. I’m so sorry.

Know your limits. Humans are not physically or technologically capable of modeling complex adaptive systems. I am a respecter of complex adaptive systems. I find those who attempt to model them under the false pretense of controlling them dangerous. Pic related.

Complex adaptive systems have numerous variables you must estimate and extrapolate to try to predict the future. These extrapolations, by and large, are complete guesses.

They can however be educated, thoughtful guesses. I’m not smearing humanity’s attempt to learn and navigate our world better. But again, some humility is needed. These are guesses.

And these aren’t “what’s Walmart’s revenue modeled 5 years out” guesses. That’s not a guess, that is a forecast. With actuarial calculations or a steady-state growth company, you can make some remarkably accurate forecasts (insurance companies confirm). Those are not guesses, those are genuine calculations. They are calculations because they are repeatable, reproducible, and predict the future well. When you can do this, you can model it. If it does not replicate or predict the future, it is not science, and it is not a model worth much of anything.

You cannot model complex adaptive systems (CAS). Those who try to should, I believe, be called CAS deniers. Climate change denial? No, you are a CAS denier. Rationalism is well-known for CAS denialism.

CAS have like 20 different inputs (that you know of) and 4 different Chesterton’s Fences in them that will produce about 14 different 2nd-order effects, 21 different 3rd-order effects, and 11 different 4th-order effects you didn’t even know could happen.

CAS deniers manipulate the word "science" to advance false claims that they can predict the unpredictable. Model the un-modelable.

In religion, we call those "prophets".

A theme among complex-adaptive-system deniers is a penchant for "models" that predict doom. Predicting doom lets them advance control under the guise of "safety." It invariably results in more political power. Curious!

In religion, we call this "eschatology".

Models for CAS that I disrespect: the economy, the climate, and any ecosystem with a massive amount of independent and dependent inputs and outputs. Before 2020 I wouldn’t have bucketed epidemiology in here, but it’s a worthy new addition to the “you really don’t know what you’re talking about, do you?” team.

Are you making a prediction of more than three years for a complex adaptive system? Please see your way out. Thank you.

I’m a proponent of a Talebian approach to these systems. You cannot put these things under your thumb, and your attempts to control them embed fragility. You will try to stifle volatility with your modeling, because all humans innately hate uncertainty. But you don’t stop volatility, you just transmute it.

The AI Doomer Excuse for Control

You can probably already tell my stance on AI doomers. I view their position as a particularly upsetting form of hubris, built on misunderstood threat models.

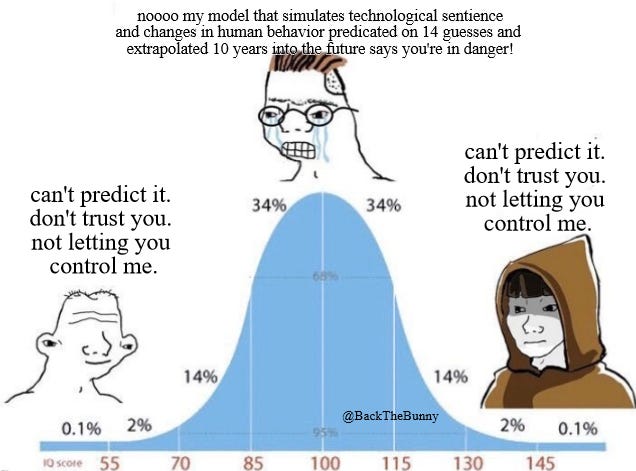

This is functionally what the doomer decelerationist thesis is predicated on:

Every AI-misalignment precept/extrapolation is based on a series of guesses. Let’s say each one has around a 5% chance of being right (and I think I’m being generous with that estimate).

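Now imagine how that compounds 8 guesses deep. Input .05^8 into your calculator and you get 0.0000000000390625, roughly 4 in 100 billion. Most calculators can't even fit it on the screen without switching to scientific notation.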
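Here's that arithmetic as a quick sketch. It assumes, as the essay does, an illustrative 5% chance per guess, and it treats each guess as independent so the probabilities simply multiply (correlated guesses would not compound this cleanly). The function name `chain_probability` is hypothetical, just for illustration.

```python
def chain_probability(p_step: float, steps: int) -> float:
    """Joint probability that every guess in a chain holds.

    Assumes each guess is independent of the others, so the
    per-step probabilities multiply. Correlated guesses would
    not compound this way.
    """
    return p_step ** steps


# Illustrative inputs from the essay: 5% per guess, up to 8 guesses deep.
for depth in range(1, 9):
    print(f"{depth} guesses deep: {chain_probability(0.05, depth):.3e}")
```

The last line printed is `8 guesses deep: 3.906e-11`, the same 0.0000000000390625 the calculator gives you. Each extra link divides the odds by another factor of 20.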

The feasibility of the assumptions you’re making is remarkably, microscopically small. The probability of anything close to your AGI storm trooper conclusion is infinitesimal.

And now, you use that as justification to exert political control over the machine.

So when I hear your 8-extrapolations-deep sci-fi paperclip maxxxing situation, I’m sorry, but you’re giving me an Amazon DCF calculation in 2003. Oh, and your model also thinks Amazon may become sentient and sterilize small children. I’m sorry, but I don’t read science fiction, for analysis or for pleasure.

What’s the more likely threat here? Government authoritarianism over the most important, truth-telling technology of our time? Or an evil AI Sauron’s Eye?

What’s the track record of governments when it comes to abuse, terror, or just general unbridled incompetence? Has that ever happened before? Do we have any precedent we can point to? ¯\_(ツ)_/¯

The horde of lawyers and power-motivated bureaucrats, that’s who you want to mitigate this Star Trek threat of yours?

You want to cede control of the most powerful, impactful technology of our time to them to protect against your .000000045% sci-fi scenario? Unequivocally, no.

AI doomers have a very poor understanding of pragmatic, actual risks. They live in the sci-fi clouds of microscopic Terminator risks, and in trying to avoid those fantasy threats they invite almost-certain ones by inadvertently letting government employees weaponize AI.

AI has a MUCH higher probability of being lethal if left in the hands of the middling and psychopathic personality types who disproportionately occupy positions of power.

AI has a far greater likelihood of being dangerous in government’s control than it does in the hands of genuinely brilliant engineers trying to create a transcendent technology for humanity.

Thus, I’m uninterested in any AI eschatological model for the end of times. I know most mean well, but I don’t find them worth the paper they’re printed on. And they will be a breeding ground of justifications for those aiming at theocratic capture of AI.

P(doom) fairy tales are exploited by those who seek control. They will use your well-meaning predictions to justify an “emergency” in which it just so happens this scary technology is safest in their hands, and government expands accordingly. It’s funny how those darn models always seem to trend one way, both in what they conclude and in what they end up doing for government power...

Recognize the pattern, and resist the real, concrete threat: government capture. Do not be a CAS denier.

Subscriptions and shares are very much appreciated. If you enjoyed the essay, give it a like.

You can show your appreciation by becoming a paid subscriber, or donating here: 0x9C828E8EeCe7a339bBe90A44bB096b20a4F1BE2B

I’m building something interesting, visit Salutary.io
